Can I trust my anomaly detection system? A case study based on explainable AI

Published in xAI 2024 | Explainable Artificial Intelligence, 2024

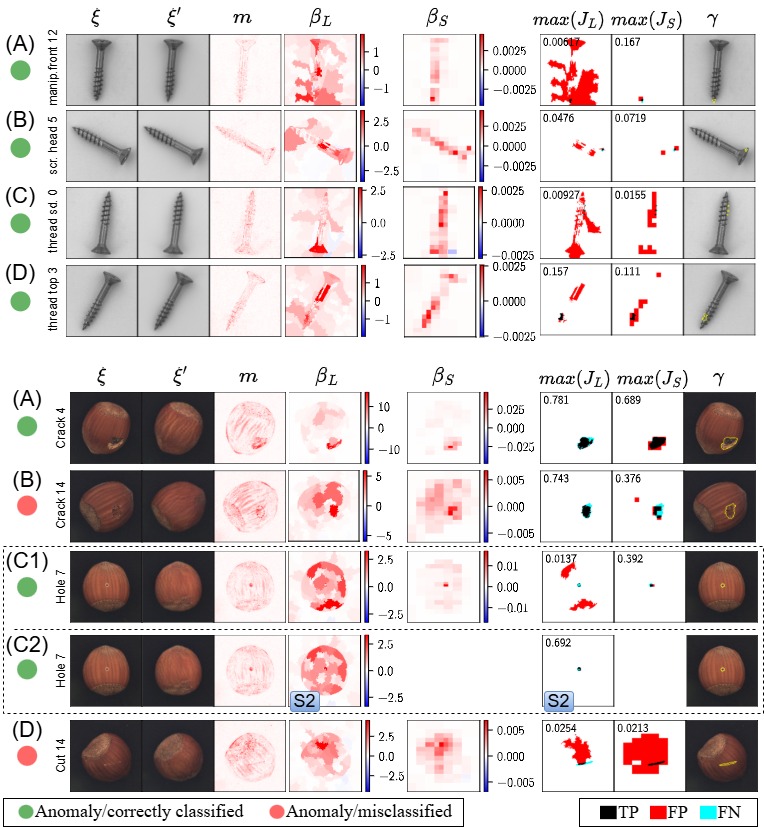

Generative models based on variational autoencoders are a popular technique for detecting anomalies in images in a semi-supervised context. A common approach uses the anomaly score to detect the presence of anomalies, and it is known to reach high levels of accuracy on benchmark datasets. However, since anomaly scores are computed from reconstruction disparities, they often obscure the detection of various spurious features, raising concerns about their actual efficacy. This case study explores the robustness of an anomaly detection system based on variational autoencoder generative models through the use of eXplainable AI methods. The goal is to gain a different perspective on the real performance of anomaly detectors that use reconstruction differences. In our case study, we discovered that, in many cases, samples are detected as anomalous for the wrong or misleading factors.
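Because the argument above turns on anomaly scores computed from reconstruction differences, here is a minimal sketch of that idea. It assumes a trained VAE-GAN has already produced a reconstruction for each image; the mean absolute pixel error used as the image-level score is one common choice and not necessarily the paper's exact scoring function, and the threshold is a hypothetical parameter. ROC AUC is included only as one standard way to summarize detection accuracy on benchmarks.

```python
import numpy as np


def anomaly_map(image: np.ndarray, reconstruction: np.ndarray) -> np.ndarray:
    """Per-pixel absolute reconstruction error, averaged over channels if any."""
    diff = np.abs(image.astype(np.float64) - reconstruction.astype(np.float64))
    return diff.mean(axis=-1) if diff.ndim == 3 else diff


def anomaly_score(image: np.ndarray, reconstruction: np.ndarray) -> float:
    """Image-level score: mean of the error map (an assumed, common choice)."""
    return float(anomaly_map(image, reconstruction).mean())


def is_anomalous(score: float, threshold: float) -> bool:
    """Flag a sample whose score exceeds a hypothetical threshold,
    e.g. one chosen on held-out normal images."""
    return score > threshold


def roc_auc(scores, labels) -> float:
    """ROC AUC via the Mann-Whitney statistic: the probability that a random
    anomalous sample (label 1) outscores a random normal one (label 0);
    ties count as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

A high AUC only says that anomalous images tend to score higher than normal ones; it says nothing about *which* pixels drove the score, which is the gap the explanation-based analysis targets.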

- Special Session: Explainable AI for improved human-computer interaction

- Conference: xAI-2024 (Explainable Artificial Intelligence)

- Link to talk: https://xaiworldconference.com/2024/timetable/event/s-17-a-1

Contributions 📃

In this research, we:

- Review an explainable Anomaly Detection system architecture that combines VAE-GAN models with the LIME and SHAP explanation methods;

- Quantify how well the Anomaly Detection system detects anomalies using anomaly scores;

- Use XAI methods to determine whether anomalies are actually detected for the right reason, by comparing the explanations with a ground truth. Results show that it is not uncommon to find samples that were classified as anomalous, but for the wrong reason. We adopt a methodology based on the optimal Jaccard score to detect such samples.
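The optimal Jaccard comparison in the last contribution can be sketched as follows, assuming the explanation is a per-pixel importance map (for example from LIME or SHAP) and the ground truth is a binary defect mask. Every distinct importance value is tried as a threshold, and the best Jaccard index (intersection over union) between the thresholded explanation and the mask is kept. The function names and the thresholding rule are illustrative assumptions; the paper's exact procedure may differ.

```python
import numpy as np


def jaccard(pred: np.ndarray, truth: np.ndarray) -> float:
    """Jaccard index (intersection size / union size) of two boolean masks.
    Returns 1.0 when both masks are empty."""
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0
    return float(np.logical_and(pred, truth).sum() / union)


def optimal_jaccard(explanation: np.ndarray, truth: np.ndarray):
    """Best Jaccard index over all thresholds of an explanation map.

    Pixels with importance >= t form the predicted anomalous region; every
    distinct value of the map is tried as t. Returns (best_score, best_t);
    best_t is None if no threshold overlaps the mask at all.
    """
    truth = truth.astype(bool)
    best_score, best_t = 0.0, None
    for t in np.unique(explanation):
        score = jaccard(explanation >= t, truth)
        if score > best_score:
            best_score, best_t = score, float(t)
    return best_score, best_t


# Toy example: the explanation's top pixels coincide with the defect mask.
explanation = np.array([[0.9, 0.8], [0.1, 0.0]])
truth = np.array([[1, 1], [0, 0]])
print(optimal_jaccard(explanation, truth))  # (1.0, 0.8)
```

A sample that the detector flags as anomalous but whose optimal Jaccard score stays low is a candidate for having been detected for the wrong reason; what counts as "low" is left as an analyst's choice in this sketch.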

Method Availability

- The method is available under: https://github.com/rashidrao-pk/anomaly_detection_trust_case_study

- Links: GitHub, Paper PDF on arXiv, Proceedings of xAI, Download Slides

Datasets and Models

- Dataset: MVTec AD (Hazelnut and Screw categories)

- Model: Variational Autoencoder Generative Adversarial Network (VAE-GAN)

Workflow
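The workflow pairs the VAE-GAN anomaly score with LIME and SHAP explanations. As a rough, dependency-free illustration of the LIME idea only (it does not use the `lime` package and is not the authors' code), the sketch below treats a regular grid of patches as features instead of superpixels, "switches off" random subsets of patches by replacing them with the reconstruction (an assumed perturbation choice), and fits a weighted linear surrogate to the resulting anomaly scores. The function name and the `patch`, `n_samples`, and `kernel_width` parameters are hypothetical.

```python
import numpy as np


def lime_style_patch_importance(image, reconstruction, score_fn, patch=8,
                                n_samples=500, kernel_width=0.25, seed=0):
    """LIME-like explanation of an anomaly score over a grid of patches.

    Assumptions (illustrative, not the paper's setup): features are square
    grid patches rather than superpixels, and a patch is switched off by
    copying in the reconstruction. Border pixels that do not fill a whole
    patch are never perturbed. Returns an (H // patch, W // patch) array of
    surrogate coefficients; larger values mean the patch raises the score.
    """
    rng = np.random.default_rng(seed)
    gh, gw = image.shape[0] // patch, image.shape[1] // patch
    n_feat = gh * gw
    # Binary design matrix: 1 = patch kept from the original image.
    z = rng.integers(0, 2, size=(n_samples, n_feat))
    z[0] = 1  # always include the unperturbed image
    y = np.empty(n_samples)
    for i, row in enumerate(z):
        perturbed = image.copy()
        for k in np.flatnonzero(row == 0):
            r, c = divmod(k, gw)
            sl = (slice(r * patch, (r + 1) * patch),
                  slice(c * patch, (c + 1) * patch))
            perturbed[sl] = reconstruction[sl]
        y[i] = score_fn(perturbed, reconstruction)
    # Exponential kernel on the fraction of patches switched off.
    dist = 1.0 - z.mean(axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # Weighted least squares (rows scaled by sqrt(w)) with an intercept column.
    x = np.hstack([np.ones((n_samples, 1)), z])
    sw = np.sqrt(w)[:, None]
    coef, *_ = np.linalg.lstsq(x * sw, y * sw[:, 0], rcond=None)
    return coef[1:].reshape(gh, gw)
```

Usage: with `score_fn = lambda a, b: float(np.abs(a - b).mean())`, the returned grid is a coarse importance map of the kind that can be upsampled and compared with the ground-truth defect mask.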

Results

Authors ✍️

| Sr. No. | Author Name | Affiliation | Google Scholar |
| :-: | :-: | :-: | :-: |
| 1. | Muhammad Rashid | University of Torino, Dept. of Computer Science, Torino, Italy | Muhammad Rashid |
| 2. | Elvio G. Amparore | University of Torino, Dept. of Computer Science, Torino, Italy | Elvio G. Amparore |
| 3. | Enrico Ferrari | Rulex Innovation Labs, Rulex Inc., Genova, Italy | Enrico Ferrari |
| 4. | Damiano Verda | Rulex Innovation Labs, Rulex Inc., Genova, Italy | Damiano Verda |

Keywords 🔍

Anomaly detection · variational autoencoder · eXplainable AI

Citation (BibTeX)

@InProceedings{10.1007/978-3-031-63803-9_13,
  author="Rashid, Muhammad and Amparore, Elvio and Ferrari, Enrico and Verda, Damiano",
  editor="Longo, Luca and Lapuschkin, Sebastian and Seifert, Christin",
  title="Can I Trust My Anomaly Detection System? A Case Study Based on Explainable AI",
  booktitle="Explainable Artificial Intelligence",
  year="2024",
  publisher="Springer Nature Switzerland",
  address="Cham",
  pages="243--254"
}

Recommended citation: Rashid, Muhammad et al. (2024). "Can I Trust My Anomaly Detection System? A Case Study Based on Explainable AI." In World Conference on Explainable Artificial Intelligence, pp. 243-254. Cham: Springer Nature Switzerland.
